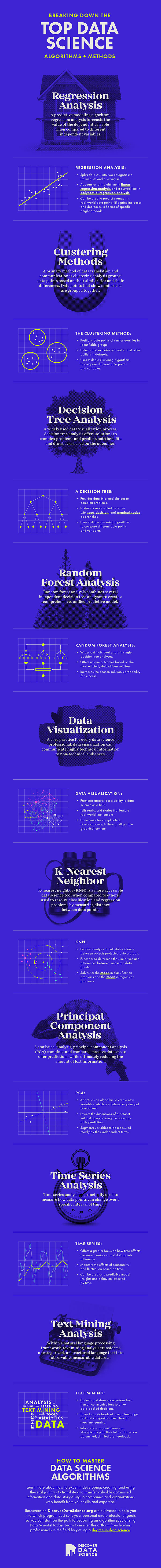

Breaking Down the Top Data Science Algorithms + Methods

As computing technology continues to advance, the amount of information that we can collect and interpret is growing exponentially. For practically every subject imaginable, data can be collected, compared, analyzed, visualized, and made actionable in a meaningful way. Now with more data being produced than ever before, proactive and innovative data science algorithms are becoming increasingly important tools to make sense of massive, often disconnected datasets.

In this article, we dive into some of the most popular and in-demand algorithms for data science applications. By breaking each one down in terms of how it appears in the real world, we show how these algorithms, tools, methods, and practices demonstrate the flexibility and potential of data science as a field.

- What Regression Analysis Tells Us

- Clustering Methods

- Decision Tree Analysis

- When to Apply Random Forest Analysis

- Data Visualization and Its Significance

- Understanding K-Nearest Neighbor

- When to Use Principal Component Analysis

- Time Series in Data Science

- Text Mining Analysis

- How to Master Data Science Algorithms

- Top Data Science Algorithms + Methods Infographic

What Regression Analysis Tells Us

Regression analysis is a machine learning technique that ultimately functions as a predictive model. Its predictions come from the relationships between one or more independent variables and a single dependent variable. By incorporating regression analysis into your data science problem-solving toolkit, you can more precisely forecast how the value of the dependent variable you’re measuring will change as the independent variables change.

Multiple forms of regression analysis can be used based on the scope and direction of the project. Centrally, though, the overall dataset that’s being manipulated and/or analyzed should be divided into two different categories: one set for training and the other for testing. Through regression analysis, these two different groups serve as the sources of comparison that will structure the predictive model. Specifically, the training dataset will use its own data points to build a line projected on a graph – one that can be straight or curved depending on the approach and the data – that plots the independent variable in relation to the dependent variable.

Separately, the model built from the training dataset will then be used to predict the values of the dependent variable found in the testing dataset. The quality of these predictions can be measured in a number of ways under the regression analysis umbrella, including R-squared (otherwise known as the coefficient of determination), the Pearson correlation coefficient, and the root-mean-square deviation, to name a few. When the predictions are compared against the testing dataset in programming languages like Python, R, or SQL, you gain a better understanding of the model’s overall accuracy. If that score doesn’t reach a certain threshold, you can then adjust how you have split the datasets or try a different form of regression.
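The train-and-test workflow described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production approach: the home sizes and prices are hypothetical, the every-third-row split is an arbitrary choice, and the helper names (`fit_line`, `r_squared`, `rmse`) are invented for this example.

```python
import math

def fit_line(xs, ys):
    """Ordinary least squares for one independent variable: y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def r_squared(actual, predicted):
    """Coefficient of determination: share of variance the model explains."""
    mean_a = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_a) ** 2 for a in actual)
    return 1 - ss_res / ss_tot

def rmse(actual, predicted):
    """Root-mean-square deviation between actual and predicted values."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

# Hypothetical data: home size (hundreds of sq ft) and price (thousands of dollars).
sizes = [8, 10, 12, 14, 16, 18, 20, 22, 24, 26]
prices = [165, 198, 245, 275, 330, 355, 410, 432, 490, 515]

# Hold out every third row for testing; train on the rest.
test_idx = [i for i in range(len(sizes)) if i % 3 == 2]
train_idx = [i for i in range(len(sizes)) if i % 3 != 2]

slope, intercept = fit_line([sizes[i] for i in train_idx], [prices[i] for i in train_idx])

# Predict the held-out prices and score the predictions.
test_actual = [prices[i] for i in test_idx]
test_predicted = [slope * sizes[i] + intercept for i in test_idx]
r2 = r_squared(test_actual, test_predicted)
error = rmse(test_actual, test_predicted)
print(f"R-squared: {r2:.3f}, RMSE: {error:.2f}")
```

Scoring on rows the model never saw during fitting is what makes the accuracy figure meaningful; scoring on the training rows alone would tend to overstate it.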

Additionally, different kinds of regression analysis can be used to build different, sometimes more informed forecasts. One common example is using polynomial regression analysis instead of linear regression analysis when the relationship between the variables is curved. Making this jump, which isn’t necessary in every scenario depending on the variables with which you’re working, requires a different equation. The difference between the two becomes obvious in how the graphs look and how the projections are cast: a linear regression analysis will feature a straight line, while a polynomial regression analysis will contain a curve whose shape depends on the degree of the polynomial.
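To make the linear-versus-polynomial contrast concrete, the sketch below fits both a straight line (degree 1) and a curve (degree 2) to the same hypothetical, roughly U-shaped data and compares their R-squared scores. It solves the least-squares normal equations directly; the function names are illustrative, and for brevity the scores are computed on the fitted data instead of a separate testing set.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def polyfit(xs, ys, degree):
    """Least-squares polynomial coefficients [c0, c1, ...] via the normal equations."""
    size = degree + 1
    A = [[sum(x ** (i + j) for x in xs) for j in range(size)] for i in range(size)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(size)]
    return solve(A, b)

def predict(coeffs, x):
    return sum(c * x ** k for k, c in enumerate(coeffs))

def r_squared(actual, predicted):
    mean_a = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_a) ** 2 for a in actual)
    return 1 - ss_res / ss_tot

# Hypothetical data that follows a curve, not a straight line.
xs = [-3, -2, -1, 0, 1, 2, 3]
ys = [9.2, 3.9, 1.1, 0.1, 0.9, 4.2, 8.8]

linear = polyfit(xs, ys, degree=1)
quadratic = polyfit(xs, ys, degree=2)

r2_linear = r_squared(ys, [predict(linear, x) for x in xs])
r2_quadratic = r_squared(ys, [predict(quadratic, x) for x in xs])
print(f"linear R-squared: {r2_linear:.3f}, quadratic R-squared: {r2_quadratic:.3f}")
```

On data like this the straight line explains almost none of the variation while the degree-2 curve explains nearly all of it. On data that really is linear, the extra degree would add little.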

Why is regression analysis used?

Through this machine learning data science algorithm, you can build models that can make predictions that have real-world implications. For example, data analysts who work in the real estate industry will likely use regression analysis, among other data forecasting techniques, to forecast price increases and decreases in specific neighborhoods. These kinds of models can then help real estate investors make a data-driven decision that will help them grow their business or save them from making a major financial mistake.

Clustering Methods

Another vital machine learning and data mining technique, clustering analysis works to group the data points in a dataset based on their similarities. Put differently, clustering analysis places objects closer to other objects they resemble and apart from objects that present greater differences. When these objects are positioned together, they form clusters that are separated from other clusters that house distinct objects.

Clustering analysis is a significant data science tool because it enables an algorithm to independently discover patterns that the clustered data points reflect. Because the act of clustering these different data points makes for easily identifiable groups within a dataset or pulled from different datasets, clustering analysis is also important for detecting and explaining outliers and other anomalies.

Clustering analysis can be performed through a number of avenues and channels. According to the graphics processing unit juggernaut NVIDIA, clustering as a data science practice can be utilized “to identify groups of similar objects in datasets with two or more variable quantities.” Additionally, most data analysts and data science professionals will rely on multiple clustering algorithms to conduct an effective, comprehensive, and accurate analysis.
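As one illustration of grouping similar objects across two variable quantities, here is a minimal sketch of k-means, a widely used clustering algorithm (the article does not name a specific algorithm, so this choice is an assumption). The customer data, the two starting centroids, and the function name `kmeans` are all hypothetical.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm: assign each point to its nearest centroid, then move
    each centroid to the mean of the points assigned to it."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical customers described by (age, monthly spend in dollars).
customers = [(22, 30), (25, 35), (24, 28), (58, 90), (61, 95), (60, 88)]

# Starting centroids are picked by hand here; real implementations usually
# choose them randomly or with a seeding heuristic.
centroids, clusters = kmeans(customers, centroids=[customers[0], customers[3]])

for center, members in zip(centroids, clusters):
    print(center, members)
```

The algorithm is never told which customers belong together; the two groups emerge from the distances alone, which is the "independent discovery" of patterns that clustering offers.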

Why is clustering analysis used?

In the real world, clustering analysis has played a major role across an array of industries, including genetics, x-ray and imaging technologies, market research, and cybersecurity, among others. In the context of market research, clustering methods help marketers for major brands across the world identify target audiences. By incorporating a large number of datasets that cover demographic, geographic, psychographic, and socio-economic information for different communities and populations, these marketers better understand how different groups of people are unexpectedly similar or different. Through clustering analysis, marketers for these major companies can then create effective messaging that resonates with the greatest number of potential customers, based on information they wouldn’t have been able to deduce by more conventional means.

Decision Tree Analysis

Decision tree analysis gets its name from projecting a data visualization process that resembles a tree. As a tree of branched info, it represents different solutions that have unique benefits and drawbacks connected to different outcomes – which are all in response to a central problem. Through machine learning and data mining, decision tree analysis is used to help organizations across private, public, and governmental sectors make more data-informed choices.

For organizations with complex and difficult problems, decision tree analysis can open the door to identifying otherwise unknown opportunities. In practical terms, the decision tree model visually reads from left to right. Starting on the left, the core problem or circumstance breaks out into nodes that represent the different avenues from the central issue. These nodes typically fall into three categories:

- Root nodes function as the central starting point, the “root” that then divides into the many branches of the tree.

- Decision nodes act as offshoots of the root node, presenting other possibilities or choices that stem from the root issue. In decision tree analysis, these nodes traditionally take the shape of squares.

- Terminal nodes, usually represented as triangles, are the final outcomes or possibilities that offer no further break-off decisions.

Importantly, while decision tree analysis was historically performed manually with data inputs, newer software now makes it possible for the analysis to comb through massive data sets automatically. By automating this decision tree analysis process, choices can ultimately be more informed by data, which makes the outcomes in the model more realistic and more opportunity oriented.
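The root, decision, and terminal nodes described above map naturally onto a small tree data structure. The sketch below is a simplified illustration: the business question, the options, and the payoff figures are hypothetical, and the traversal simply picks the terminal node with the highest payoff. A fuller analysis would typically also weigh probabilities and costs.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    label: str
    payoff: float = 0.0          # only meaningful on terminal nodes
    children: list = field(default_factory=list)

    @property
    def is_terminal(self):
        # A terminal node offers no further break-off decisions.
        return not self.children

def best_path(node):
    """Return (payoff, path) for the most valuable terminal node under `node`."""
    if node.is_terminal:
        return node.payoff, [node.label]
    payoff, path = max((best_path(child) for child in node.children), key=lambda r: r[0])
    return payoff, [node.label] + path

# Hypothetical decision: how to expand a product line (payoffs in $ thousands).
root = Node("Expand product line?", children=[
    Node("Build in-house", children=[
        Node("Launch premium model", payoff=120),
        Node("Launch budget model", payoff=80),
    ]),
    Node("License from partner", children=[
        Node("Exclusive license", payoff=95),
        Node("Shared license", payoff=60),
    ]),
    Node("Do nothing", payoff=0),
])

payoff, path = best_path(root)
print(payoff, " -> ".join(path))
```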

Why use decision tree analysis?

This data crunching method is used across industries and sectors to help organizations make data-driven, balanced decisions. For example, in financial spaces, investors, hedge funds, and wealth management professionals often use a variety of decision-making models to choose their best investment opportunity. When these professionals use decision tree analysis, they are able to apply a number of variables attached to different data sets that can give them a better understanding of how a potential investment option may pan out. By assessing the history, the potential, and the risk of an investment through an interactive data model like decision tree analysis, investors will be able to put their money into options that have a higher probability of earning money with lower overall risk.

When to Apply Random Forest Analysis

Another data science algorithm that functions to solve classification problems, random forest analysis is based on a collection of decision tree analyses. At its core, random forest analysis builds several individual decision trees, collects each tree’s individual prediction, and combines them (for classification, typically by majority vote) into one comprehensive prediction. The logic here is that a unified predictive model that pools many individual decision tree analyses will be more effective than those decision tree analyses offering predictions independently.

There are many advantages to running a random forest analysis based on multiple decision tree analyses. For one, the predictive errors that appear in specific decision tree analyses will generally be outvoted and make little difference in the comprehensive random forest analysis. Additionally, because these decision tree analyses are each built from a different random sample of the same data and offer unique outcomes, the random forest analysis is able to present a more robust, more data-informed outcome. This process can considerably improve the chosen solution’s probability of success.
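The voting idea behind a random forest can be sketched with deliberately tiny "trees." In the example below, each tree is a one-split stump trained on its own bootstrap sample, and the forest returns the majority vote. This illustrates the aggregation step only: real random forests grow deeper trees and also sample features at random. The data and function names are hypothetical.

```python
import random
from collections import Counter

def train_stump(sample):
    """A one-split 'tree': threshold halfway between the two class means."""
    by_class = {0: [], 1: []}
    for x, label in sample:
        by_class[label].append(x)
    if not by_class[0] or not by_class[1]:
        # Degenerate bootstrap sample with a single class: always predict it.
        only = 0 if by_class[0] else 1
        return lambda x: only
    mean0 = sum(by_class[0]) / len(by_class[0])
    mean1 = sum(by_class[1]) / len(by_class[1])
    threshold = (mean0 + mean1) / 2
    low, high = (0, 1) if mean0 < mean1 else (1, 0)
    return lambda x: low if x < threshold else high

def train_forest(data, n_trees=25, seed=0):
    rng = random.Random(seed)
    # Each tree sees its own bootstrap sample, drawn with replacement.
    return [train_stump([rng.choice(data) for _ in data]) for _ in range(n_trees)]

def forest_predict(forest, x):
    """Majority vote across all trees."""
    votes = Counter(tree(x) for tree in forest)
    return votes.most_common(1)[0][0]

# Hypothetical one-feature dataset: (measurement, class label).
data = [(1, 0), (2, 0), (3, 0), (4, 0), (5, 0),
        (11, 1), (12, 1), (13, 1), (14, 1), (15, 1)]

forest = train_forest(data)
print(forest_predict(forest, 2), forest_predict(forest, 14))
```

Because every tree is trained on a slightly different sample, an unlucky split in one tree is outvoted by the others, which is the error-cancelling effect described above.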

Data Visualization and Its Significance

To date, there are practically countless data visualization algorithms that can be used in different programs and environments. From simpler Microsoft Excel functions to more advanced, more conceptual representations of Big Data, data visualization helps non-technical audiences understand how data points appear in or affect the real world. A core responsibility for every data science professional, effective data visualization methods can communicate digestible and compelling messages in a graphical form.

According to the leading data visualization platform Tableau, data visualization methods and practices ultimately promote a more accessible path into understanding the impact of data. By presenting important data points in the form of a chart, a graph, a map, an interactive table, or an infographic, users can more easily notice trends, patterns, and even anomalies. Additionally, when data is visualized with a clear message and through a clear narrative, the graphical representation can be especially convincing. Indeed, data storytelling is an increasingly important tool for every data science professional because of its effective application in virtually every industry.

Why use data visualization processes?

Data visualization invites people to understand statistics, large data sets, and higher-level concepts practically at a glance. Because people are able to grapple with these kinds of data points and their conclusions more quickly, the information presented in the data visualization won’t prove overwhelming. This benefit means that several organizations and data science professionals have been able to communicate complicated concepts in straightforward graphical materials.

As an example, the Pew Research Center published “The Next America,” an interactive study that explained how the United States has changed demographically since the 1950s. The study also works with massive datasets to project how the country’s population will transform demographically through 2060. In terms of gender, race, socioeconomic status, political affiliation, and other identifying markers, the model offers a visual narrative informing users what the country used to look like and what it will look like in the future. All of this information is based on effective data collection and analysis, which has been transformed by these researchers into a compelling visualization of the data.

Understanding K-Nearest Neighbor

As a supervised machine learning algorithm, K-nearest neighbor (KNN) is a more accessible data science tool that measures distances between data points, mostly to resolve classification and regression problems. These problems can be defined as follows:

- Classification problem: A problem whose output is a discrete value. In other words, each value is defined specifically as one thing. In most classification data models, there is a predictor and a label, both based on collected data.

| Dog age | Is potty trained |
| --- | --- |
| 4 | 1 |
| 7 | 0 |
| 3 | 1 |
| 2 | 1 |
| 8 | 1 |
| 1 | 0 |
| 7 | 1 |
| 2 | 0 |

In this example model, the age of the dog is the predictor, while the potty-trained status is the label. Together, these two columns pose a classification problem because the label is a discrete category (1 for trained, 0 for not trained) rather than a continuous quantity. By working on this dataset with an algorithm like KNN, you can predict whether a dog of a given age is likely to be potty trained.

- Regression problem: Beyond classification problems, regression problems arise when we want to predict the value of a dependent variable based on the information that our independent variable, or group of independent variables, gives us.

Because it functions as a supervised machine learning algorithm, KNN needs data whose variables and labels are clearly defined in order to work properly. In other words, when you supply a dataset with clearly defined predictors and labels, KNN can calculate the distance between a new data point and the existing data points plotted on a graph.

Why use KNN?

K-nearest neighbor analyses are the most relevant when they’re used to solve classification and regression problems. For classification problems, KNN finds the K data points nearest to the new point, where K is a value you assign, and identifies which label appears most often among them. Separately, KNN can solve regression problems by calculating the mean of the labels of those K nearest neighbors. Functionally, KNN analysis works by determining similarities and differences between measured data points.
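Both uses of KNN can be sketched with the dog-age table from earlier in this section. The code below is a minimal illustration that uses absolute age difference as the distance; the function names and the choice of K = 3 are assumptions made for the example.

```python
from collections import Counter

# (dog age, is potty trained) rows from the table in this section.
dogs = [(4, 1), (7, 0), (3, 1), (2, 1), (8, 1), (1, 0), (7, 1), (2, 0)]

def nearest(data, query, k):
    """The k rows whose predictor value is closest to the query."""
    return sorted(data, key=lambda row: abs(row[0] - query))[:k]

def knn_classify(data, query, k):
    """Classification: the label that appears most often among the k neighbors."""
    labels = [label for _, label in nearest(data, query, k)]
    return Counter(labels).most_common(1)[0][0]

def knn_regress(data, query, k):
    """Regression: the mean of the k neighbors' labels."""
    labels = [label for _, label in nearest(data, query, k)]
    return sum(labels) / k

print(knn_classify(dogs, query=6, k=3))  # neighbors aged 7, 7, and 4 or 8
print(knn_classify(dogs, query=1, k=3))  # neighbors aged 1, 2, and 2
print(knn_regress(dogs, query=6, k=3))
```

Changing K changes which neighbors get a vote, so the same query can receive a different answer. In practice K is usually tuned against held-out data.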

When to Use Principal Component Analysis

Considered widely as an adaptive and flexible data science tool, principal component analysis (PCA) is a long-established method that is relied on when datasets are especially massive. According to the scholarly article Principal Component Analysis: A Review and Recent Developments, PCA helps data science professionals interpret large datasets more effectively while also minimizing the amount of lost information.

The article explains that PCA accomplishes this by adaptively creating new, uncorrelated variables, defined as the principal components, that capture as much of the data’s variance as possible. The method ultimately preserves most of the statistical information in the data while reducing the number of variables needed to describe it.

Why use principal component analysis?

One of the most important reasons for using PCA rests in the dimensions of the data you’re wanting to analyze. When working with massive datasets, there’s often a high number of variables, which can make the dataset much more difficult, if not impossible, to analyze. By working with PCA, you will be able to lower that overall number of variables by combining them into a smaller set of principal components. Because these components are uncorrelated with one another, you will be able to measure your data mostly in independent terms. Importantly, this method relies heavily on matrix calculations and linear algebra.
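The matrix calculations involved can be shown on the smallest possible case: reducing two correlated variables to one. The sketch below is an illustration under simplifying assumptions. It handles only two variables, for which the eigenvalues of the covariance matrix have a closed form, and the data points and the function name `pca_2d` are hypothetical.

```python
import math

def pca_2d(points):
    """PCA for two variables: returns the first principal component (a unit
    vector), the share of variance it explains, and the 1-D projected data."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]

    # Sample covariance matrix [[sxx, sxy], [sxy, syy]].
    sxx = sum(x * x for x, _ in centered) / (n - 1)
    syy = sum(y * y for _, y in centered) / (n - 1)
    sxy = sum(x * y for x, y in centered) / (n - 1)

    # Eigenvalues of a symmetric 2x2 matrix in closed form.
    half_trace = (sxx + syy) / 2
    spread = math.hypot((sxx - syy) / 2, sxy)
    largest, smallest = half_trace + spread, half_trace - spread

    # Eigenvector for the largest eigenvalue, normalized to unit length.
    if sxy != 0:
        vx, vy = sxy, largest - sxx
    else:
        vx, vy = (1.0, 0.0) if sxx >= syy else (0.0, 1.0)
    norm = math.hypot(vx, vy)
    component = (vx / norm, vy / norm)

    explained = largest / (largest + smallest)
    projected = [x * component[0] + y * component[1] for x, y in centered]
    return component, explained, projected

# Hypothetical measurements of two strongly correlated variables.
data = [(2.5, 2.4), (0.5, 0.7), (2.2, 2.9), (1.9, 2.2), (3.1, 3.0),
        (2.3, 2.7), (2.0, 1.6), (1.0, 1.1), (1.5, 1.6), (1.1, 0.9)]

component, explained, projected = pca_2d(data)
print(component, f"{explained:.1%} of variance kept")
```

Here a single new variable retains most of the variance of the original two, which is the trade PCA offers: fewer variables with little information lost. With many variables, the same steps are normally carried out with a linear algebra library.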

Time Series in Data Science

One of the most important variables across practically every scientific field, time weighs heavily in many data analysis practices. Time series analysis is a method used by data science professionals to measure and draw conclusions from data points that span a specific amount of time. The central goal with this kind of analysis is to demonstrate how measured variables and data points change as time passes. In other words, time series analysis measures a certain subject and how that subject changes with time.

This kind of analysis requires a lot of data. Time can traditionally be a difficult variable to measure in relation to other monitored variables, so with more data points that offer more information, the time series analysis will have a much greater chance of offering accurate predictions. According to data visualization platform Tableau, “time series analysis is used for non-stationary data—things that are constantly fluctuating over time or are affected by time.”

Why use time series analysis?

Time series analysis has grown increasingly valuable to organizations in a variety of industries. From medicine to retail shopping, time series analysis provides unique, data-driven insights that can shape an organization’s strategic planning. For example, marketers for major department stores may have historically worked with time series analysis to strategically stock certain items in certain locations around specific holiday seasons. But through the advent of online shopping, these marketers would likely draw different conclusions about how in-person sales have changed in those same holiday periods. By using time series analysis that integrates in-person sales and seasonal shifts, these major retailers can make different decisions that ultimately drive sales more efficiently.
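Two of the simplest time series calculations, a moving average and a year-over-year comparison, are enough to illustrate the retail example above. The monthly sales figures below are hypothetical, and the function names are invented for this sketch.

```python
def moving_average(values, window):
    """Trailing average over `window` consecutive observations."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]

def year_over_year(values, period=12):
    """Percent change versus the same month one period earlier."""
    return [
        (values[i] - values[i - period]) / values[i - period] * 100
        for i in range(period, len(values))
    ]

# Hypothetical in-store sales (thousands of dollars), January through December.
year_1 = [40, 38, 42, 45, 47, 50, 52, 51, 48, 55, 70, 95]
year_2 = [38, 36, 40, 42, 44, 46, 47, 46, 44, 49, 60, 80]
sales = year_1 + year_2

trend = moving_average(sales, window=12)     # smooths out the holiday spike
december_change = year_over_year(sales)[-1]  # December, year 2 vs. year 1

print(f"12-month average moved from {trend[0]:.2f} to {trend[-1]:.2f}")
print(f"December in-store sales changed by {december_change:.1f}%")
```

A 12-month window averages across one full seasonal cycle, so the holiday peak no longer dominates and the underlying direction of in-store sales becomes visible.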

Text Mining Analysis

On the horizon of real-world data science applications, natural language processing is a machine learning tool that collects and draws conclusions from human communications. Text mining analysis relies on natural language processing to transform uncategorized, disconnected text into measurable datasets.

In our technological moment, where customer reviews appear on countless social media platforms, search engines, and mobile apps, companies can take advantage of this infinite feedback loop. By working with these user-generated responses, organizations can understand better how they’re faring in the market – and what changes they need to make to improve performance.

Why use text mining analysis?

Just as the availability of this consumer information (both from potential and existing customers) can shape how companies offer their products and change their strategies, the practice of mining this information can also pose unique challenges. Text mining analysis is an algorithmic approach that can take large data sets filled with unstructured information and categorize it into data-driven insights.

For example, an appliance company that specializes in vacuum technology can take direct advantage of text mining analysis. By compiling social media posts, product reviews, and other customer feedback, the vacuum company can learn what customers appreciate about its products. Conversely, that same vacuum business can begin to plan new designs for its future products based on how consistently particular critiques appear in the text mining analysis reports.
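A first step in turning unstructured reviews into something measurable is counting which terms come up most often. The sketch below is a minimal illustration using only the Python standard library; the reviews are hypothetical, and the short stop-word list is a hand-picked assumption, where production systems would lean on a natural language processing library.

```python
import re
from collections import Counter

# Hypothetical customer reviews for a vacuum product.
reviews = [
    "Great suction, but the battery dies too fast.",
    "Battery life is short. Suction is great though!",
    "Love the suction. The battery needs work.",
]

# A small, hand-picked stop-word list (an assumption for this sketch).
STOP_WORDS = {"the", "is", "but", "too", "a", "and", "though"}

def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def term_counts(documents):
    """Count how often each non-stop-word term appears across all documents."""
    counts = Counter()
    for doc in documents:
        counts.update(t for t in tokenize(doc) if t not in STOP_WORDS)
    return counts

counts = term_counts(reviews)
print(counts.most_common(3))
```

Even this crude count surfaces the pattern a product team would care about: suction is mentioned in every review, and so is the battery.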

How to Master Data Science Algorithms

While these descriptions of some of the most popular data science algorithms are helpful introductions, the best way to gain an in-depth understanding of their use cases, and of how to master them, is to learn from professionals with experience in the field. By getting a degree in data science or studying algorithmic specializations, you will be able to understand how each of these algorithms can be applied individually or together in order to solve complex problems. With a solid degree program and training, there is high potential that future data scientists such as yourself can pioneer new algorithmic methodologies over the course of your career and contribute to the industry as a data science innovator. Learn more today from the resources on DiscoverDataScience.org about getting a Master’s in Data Science.
